This is part-2 of the "EnchantingProgram" post, read this post first:

http://magical-parallel-computing.blogspot.in/2017/04/a-simple-python-program-using.html

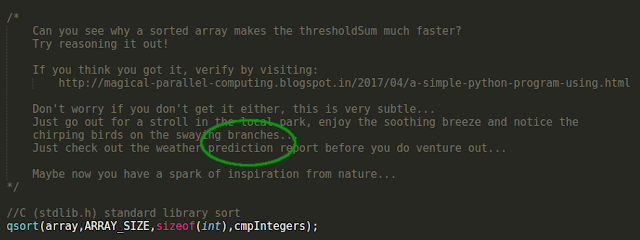

So, let's see the actual reason of the speedup in the C and C++ programs. Lo and Behold, it is the effect of Branch Prediction!

Surprised? Well, at least my comments in the programs should have given you some direction!!!

The if condition leads to a branch in the control flow. We know that branch predictions lead to pipeline flushes and create a delay in the piped execution scheme.

Modern microprocessors utilize complex run-time systems for throughput, execution speed and memory efficiency. One example of such a technique is dynamic branch prediction.

A long time ago, microprocessors used only a basic technique called static branch prediction, with two general rules:

- A forward branch is presumed to be not taken

- A backward branch is presumed to be taken

Now, static branch prediction is used in simple cases and when logging data is not available initially for the dynamic prediction.

The conditional branches once fetched into the pipeline, might become a liability if they evaluate to false, as they require a flush of an entire pipeline phase. To avoid this, microprocessors might use continuous logging of conditional statement results to predict whether a branch is taken or not.

If the prediction is wrong, then there will be an overhead. Nevertheless, this is definitely better than static branch prediction. Note that, dynamic nature of prediction adds complexity to the design of pipelines and instruction sets.

Now you might understand the magic happening behind the curtains of the language run-time. Dynamic branch predictors essentially learn patterns in the input to reduce the number of wrong branch predictions and thus pipeline flushes. They do so by using a simple data structure called a Branch Target Buffer (BTB). The concept such as loop unrolling are designed according to the length of the BTB to prevent higher probability of wrong predictions.

It is this dynamic prediction that lead to problems when random number comparisons were made. The non-existent branch patterns were assumed by the BTB, leading to lots of wrong predictions and pipeline stalling! So, when the array was sorted and then comparisons were made on each element of the array, the dynamic branch predictor gets the pattern of repeated Boolean values.(given the <256 condition and sorting being ascending order: first all true, then all false).

Subtle yet so simple. WAIT, we're not done yet!

I wanted to wrap up this post with the revelation of branch prediction, but then I tried the same program with Python3.

Lo and behold, for the second time, the speedup is very minimal or sometimes even negative! All the branching behaviour at the microprocessor/hardware level we discussed about, is not applicable to Python? Of course it is!

There is another influencing factor here. I took a few hours of reading Python blogs and language documentation to understand why. Can you figure it out?

Don't worry, I won't fork another thread this time to hide the spoiler this time!

It has to do with the design of programming languages. C was mainly designed for system software development. The main focus of the C language was to uphold code efficiency and portability. Python, on the other hand, is an easy-to-comprehend scripting language, with multiple object-oriented and functional programming paradigm features.

It all depends on the way in which the language maintains the data objects in memory. In C, almost all of the libraries are stored in either the stack or the heap. In Python, all datatypes and functions are first class objects and the concept of reference holds equally well for them as they do for regular objects.

C/C++ defines integer as a primitive data-type, which incidentally uses 4 bytes of memory on a standard x64 machine. However, in Python, the integer datatype is actually predefined class which has the following structure:

Due to the fact that Python objects are automatically managed on the heap using the concept of reference count, there will be rearrangement problems with operations such as sorting, So, when sorting an array, only the references to the integer objects are manipulated. The heap references if non-contiguous, will lead to lots of cache misses.

The array.sort() method call just juggles around the array element references in place, to order the elements using some adaptive sorting algorithm. So, sorting might lead to non-contiguous locations which hurt the spatial locality of reference that caches rely on.So, an example such as sorting an existing randomized array using array.sort() leads to caching problems while traversing the array.

It all depends on the way in which the language maintains the data objects in memory. In C, almost all of the libraries are stored in either the stack or the heap. In Python, all datatypes and functions are first class objects and the concept of reference holds equally well for them as they do for regular objects.

C/C++ defines integer as a primitive data-type, which incidentally uses 4 bytes of memory on a standard x64 machine. However, in Python, the integer datatype is actually predefined class which has the following structure:

typedef struct {

PyObject_HEAD;

long ob_ival;

} PyIntObject;

Due to the fact that Python objects are automatically managed on the heap using the concept of reference count, there will be rearrangement problems with operations such as sorting, So, when sorting an array, only the references to the integer objects are manipulated. The heap references if non-contiguous, will lead to lots of cache misses.

The array.sort() method call just juggles around the array element references in place, to order the elements using some adaptive sorting algorithm. So, sorting might lead to non-contiguous locations which hurt the spatial locality of reference that caches rely on.So, an example such as sorting an existing randomized array using array.sort() leads to caching problems while traversing the array.

The above caching problem attenuates the speedup provided by the sorting (which we did to improve branch prediction). The resultant execution time varies, but the speedup, if any, is very little.

This is my reasoning behind the enchanting programs in C,C++ and Python3. Please feel free to convey your ideas/answers to me.

See you in the next post!

Comments

Post a Comment